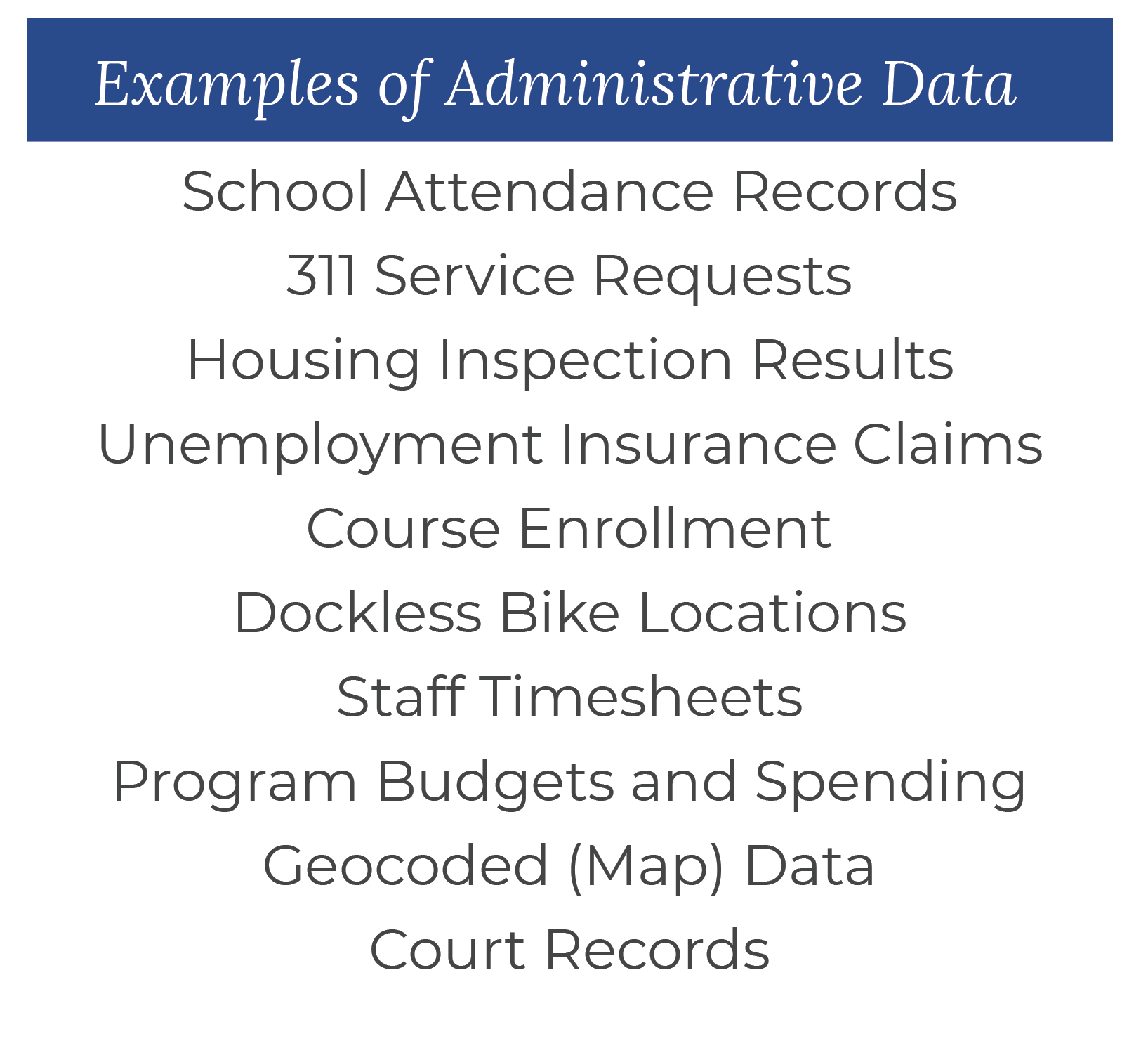

Administrative data analysis involves taking data that already exists in a program’s database (called administrative data) and using it to generate a better understanding of a program or policy. We hope these insights then inform the path forward for a new program, policy, or change within an existing program. There are several ways to use administrative data to learn about programs and policies. Broadly, they fall into two groups: (1) describing what’s happening during the program and (2) trying to say whether the program works.

Let’s explore both of these with a hypothetical example. Suppose a DC government agency enlists a community-based organization to run an after-school tutoring program for students who are struggling with their coursework. The agency is interested in using existing data to answer important questions about how the program has been working so far.

What can we learn through descriptive analysis?

We often start with one type of administrative data analysis: descriptive analysis. Descriptive analysis means answering questions that “describe” the program through basic statistics, like How many people does the program serve? What is their average age? Where do they live? and so on. We often use these basic counts, averages, and tabulations to determine which aspects of a program we should investigate further. As we start digging into the data, we come up with new questions and new insights, and we go back and forth between questions and analysis because of what we learn. Still, it is very important to have a good idea of the overall goals of your analysis before you start. Otherwise, it’s easy to fall down a rabbit hole of data!

In the example of the after-school tutoring program, we might start with basic questions about who’s being served. Then we’ll dig deeper into what’s going on during the program and ask, How often are students meeting with tutors? or What subjects are they working on with their tutors? Sometimes the data we care about is in our dataset. When it’s not, we think about how important it would be for us to have the data, then work with agencies to see if they have the data (and are willing to share it) or if it’s worth collecting new data.
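To make the idea of counts, averages, and tabulations concrete, here is a minimal sketch in Python. The records and field names (`age`, `ward`, `sessions`, `subject`) are made up for illustration; a real program database would look different.

```python
from collections import Counter
from statistics import mean

# Made-up records for illustration only; field names are hypothetical.
students = [
    {"student_id": 1, "age": 14, "ward": "Ward 7", "sessions": 6, "subject": "math"},
    {"student_id": 2, "age": 15, "ward": "Ward 8", "sessions": 2, "subject": "reading"},
    {"student_id": 3, "age": 13, "ward": "Ward 7", "sessions": 9, "subject": "math"},
    {"student_id": 4, "age": 16, "ward": "Ward 5", "sessions": 4, "subject": "science"},
]


def describe(records):
    """Return basic counts, averages, and tabulations for the program."""
    return {
        # How many people does the program serve?
        "n_students": len(records),
        # What is their average age?
        "average_age": mean(r["age"] for r in records),
        # How often are students meeting with tutors?
        "average_sessions": mean(r["sessions"] for r in records),
        # Where do they live?
        "students_by_ward": Counter(r["ward"] for r in records),
        # What subjects are they working on?
        "students_by_subject": Counter(r["subject"] for r in records),
    }


summary = describe(students)
for question, answer in summary.items():
    print(question, answer)
```

Each entry in `summary` answers one of the descriptive questions above, and any surprising number is a prompt for the next question.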

An illustration of how a Lab data scientist might approach an administrative data analysis project on an after-school tutoring program.

We can get pretty far with descriptive analysis. It’s a great starting point. Even when we’re setting up a randomized evaluation or predictive model, we want to know what type of work a program is doing and whom it is serving. But descriptive analysis cannot tell us whether a program is working. For that, we need something a little more powerful.

Can administrative data analysis tell us whether a program or policy is working, or will work in the future?

Often we want to know whether a program works. These are what we call questions of “causality”: does the program cause changes in the things (outcomes) we care about? A key to answering questions about “whether a program works” is being able to compare what happens with the program to what happens without the program. We’re trying to compare two groups that are as similar as possible, except for the presence or absence of the program.

Ideally, we would conduct a randomized evaluation, since this method allows us to say with the most certainty that our groups are similar and that a program worked. For feasibility, political, or ethical reasons, a randomized evaluation is not always possible. When randomized evaluations are not possible, we can use administrative data analysis to get a peek at whether a program works, but without as much certainty that the program really caused the changes we’re seeing.

To answer causal questions with administrative data, we look for a naturally occurring situation where there are two nearly identical groups but one of them is in the program and the other is not. Sometimes these situations occur because of how the program is administered. With these two groups, we’d do what is called a quasi-experimental evaluation (also known as a quasi-experimental design or “QED”). QEDs can take different forms, but they share the same goal: find or create through data a situation where there are two almost identical groups, but where only one group is influenced by the program or policy of interest.

Let’s return to the tutoring example. Suppose the community-based organization had a limited number of tutors and schools used grade point average (GPA) to refer students to tutoring: students with a GPA of 1.9 or lower (below a C) were referred to tutoring; students with a GPA of 2.0 or higher (a C or higher) were not. To measure how well the program worked, we would ask, are students who ‘just make’ the cutoff to the program (e.g., those with a 1.8 or 1.9 GPA) very similar to those who ‘just miss’ the cutoff (e.g., those with a 2.0 or 2.1 GPA)?

If we could make that argument—using the data and common sense—then we might compare the subsequent school performance of these two groups to understand the tutoring’s effect on school performance. Using this type of cutoff is what’s called a regression discontinuity design (RDD). RDDs are a reasonably strong way of answering causal questions with administrative data, but our confidence in what we’re finding is limited to people just around the cutoff.
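The core comparison can be sketched in a few lines of Python. This is only the simplest version of the idea, a difference in average outcomes within a narrow window around the cutoff; a fuller RDD analysis would typically also model how outcomes trend with GPA on each side. The records and the window width (`BANDWIDTH`) are assumptions made up for illustration.

```python
from statistics import mean

CUTOFF = 2.0       # from the example: students below a 2.0 GPA were referred
BANDWIDTH = 0.25   # assumption: how close to the cutoff counts as "just" above or below

# Made-up records for illustration only: (prior GPA, GPA the following term).
records = [
    (1.8, 2.3), (1.9, 2.4), (1.9, 2.2), (1.5, 1.9),
    (2.0, 2.1), (2.1, 2.2), (2.1, 2.0), (2.8, 2.9),
]


def compare_near_cutoff(records, cutoff=CUTOFF, bandwidth=BANDWIDTH):
    """Compare later outcomes for students just below and just above the cutoff."""
    # Students who "just make" the cutoff and were referred to tutoring.
    referred = [later for prior, later in records
                if cutoff - bandwidth <= prior < cutoff]
    # Students who "just miss" the cutoff and were not referred.
    not_referred = [later for prior, later in records
                    if cutoff <= prior <= cutoff + bandwidth]
    return {
        "n_referred": len(referred),
        "n_not_referred": len(not_referred),
        "difference": mean(referred) - mean(not_referred),
    }


result = compare_near_cutoff(records)
print(result)
```

Students far from the cutoff (the 1.5 and 2.8 GPAs here) are left out of the comparison, which is exactly why the findings only speak to students near the cutoff.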

In other cases, the program doesn’t have that sharp cutoff between groups and we need to find another way to identify similar groups. For a QED with matching, we would pair up each person who participated in the program with one or more people who did not participate, but who look similar in ways we can measure. For example, for each student in tutoring, we would look in our data for a student who did not participate but who looks similar on measures recorded before the tutoring program was offered, like grades, standardized test scores, and attendance. We’d then compare the outcomes of the matched students.
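Here is a minimal sketch of one simple way to do the pairing: nearest-neighbor matching, where each tutored student is paired with the most similar non-tutored student. The data, the field names, and the `SCALES` used to put GPA and attendance on comparable footing are all assumptions for illustration, and real projects often use more careful matching methods.

```python
from statistics import mean

# Made-up data for illustration only; field names are hypothetical.
tutored = [
    {"id": "T1", "prior_gpa": 1.8, "attendance": 0.90, "later_gpa": 2.3},
    {"id": "T2", "prior_gpa": 2.4, "attendance": 0.80, "later_gpa": 2.6},
]
not_tutored = [
    {"id": "C1", "prior_gpa": 1.9, "attendance": 0.88, "later_gpa": 2.0},
    {"id": "C2", "prior_gpa": 2.5, "attendance": 0.79, "later_gpa": 2.5},
    {"id": "C3", "prior_gpa": 3.6, "attendance": 0.98, "later_gpa": 3.7},
]

# Assumption: rough ranges used to make the two measures comparable.
SCALES = {"prior_gpa": 4.0, "attendance": 1.0}


def distance(a, b):
    """How different two students look on pre-program measures."""
    return sum(abs(a[key] - b[key]) / scale for key, scale in SCALES.items())


def match_and_compare(treated, comparison):
    """Pair each tutored student with the closest non-tutored student
    (the same comparison student may be reused), then average the
    difference in later outcomes across pairs."""
    pairs = [(t, min(comparison, key=lambda c: distance(t, c))) for t in treated]
    average_difference = mean(t["later_gpa"] - c["later_gpa"] for t, c in pairs)
    return [(t["id"], c["id"]) for t, c in pairs], average_difference


pairs, average_difference = match_and_compare(tutored, not_tutored)
print(pairs, average_difference)
```

Note that the matching only uses what is in `SCALES`. Anything we cannot measure plays no role in deciding who counts as similar.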

This method, QED with matching, helps us get a better idea of how well the tutoring program is working, but we would be less certain that what we see is due to the tutoring than if we used an RDD or randomized evaluation. For instance, the students who are willing to seek help for their academic difficulties might have improved their performance regardless of the tutoring program. Because the data almost never captures student motivation, we don’t know if we’re matching students with similar levels of motivation.

As an example from our own work, we’re using a particular kind of matching, a synthetic control design, to understand whether a new program, the Crime Gun Intelligence Center (CGIC), is helping to reduce gun crime in DC.

What ethical questions do we face when we use administrative data to learn about programs?

The same feature that makes administrative data analysis so useful (we use data already generated about a program to learn about how it’s working) can lead to ethical questions as we adapt that data to answer our questions.

A first concern arises from the fact that we’re looking backwards at what happened in the past. The things we learn from administrative data analysis—no matter how sophisticated our statistical code—are limited in how much they can predict what will happen in the future.

Second, one huge benefit of leveraging existing administrative data for learning is that the data was created as part of a program’s usual operations and can be accessed immediately. This benefit is also a risk. We need to be honest that this data was not created for research purposes. What front-line staff note in the data for their own purposes may require additional judgment calls from us when we analyze it. For instance, if the data on income is missing for an individual, do we interpret that to mean that they have no income or that we just don’t know their income?
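A small sketch shows how much this judgment call can matter. The income values are made up, and `None` stands in for a blank field.

```python
from statistics import mean

# Made-up values for illustration only; None means the income field was blank.
incomes = [32000, None, 18000, None, 25000]


def average_income(values, missing_means_zero):
    """Average income under one of two readings of a blank field."""
    if missing_means_zero:
        # Reading 1: a blank field means the person has no income.
        usable = [0 if v is None else v for v in values]
    else:
        # Reading 2: a blank field means we don't know, so leave it out.
        usable = [v for v in values if v is not None]
    return mean(usable)


n_missing = sum(v is None for v in incomes)
as_zero = average_income(incomes, missing_means_zero=True)
as_unknown = average_income(incomes, missing_means_zero=False)
print(n_missing, as_zero, as_unknown)
```

The same five records give two quite different averages depending on the reading, which is why we report how many values were missing and which interpretation we used.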

This is where the work we do with our agency partners to understand the gaps is so important. Then, when we report our findings, we do our best to be transparent about these limitations and work with our agency partners to frame our conclusions in a way that recognizes the data’s imperfections. Finally, we rely on practices like collaborative code reviews and a commitment to sharing non-sensitive code on GitHub for public review, and we will always choose to spend more time on a data processing task if it means getting as close to the right answer as possible.

Last, but certainly not least, privacy is a concern, especially when we’re putting together data from multiple sources. Residents may know that the workforce development centers have their employment and earnings records and the Medicaid office has data on their health history. But residents might not want their job counselors to know about any health issues they are facing. We’re mindful of resident privacy when we do our analysis (e.g., removing names and identifiers from the data we’re working with) and report information only about groups of people, not individuals. We also have strong protections in place to make sure that the data are protected when they’re shared with us, when we use them, and when we dispose of them.
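As a rough illustration of those two habits, removing identifiers and reporting only on groups, here is a minimal Python sketch. The records and field names are made up, and the rule of hiding counts for very small groups (`MIN_GROUP_SIZE`) is an assumption added for the example rather than a description of our actual protections.

```python
from collections import Counter

IDENTIFIER_FIELDS = {"name", "client_id"}  # hypothetical field names
MIN_GROUP_SIZE = 3  # assumption: hide counts for groups smaller than this

# Made-up records for illustration only.
records = [
    {"name": "Resident A", "client_id": "0001", "ward": "Ward 7", "employed": True},
    {"name": "Resident B", "client_id": "0002", "ward": "Ward 7", "employed": False},
    {"name": "Resident C", "client_id": "0003", "ward": "Ward 7", "employed": True},
    {"name": "Resident D", "client_id": "0004", "ward": "Ward 8", "employed": True},
]


def strip_identifiers(record):
    """Return a copy of the record without names or other identifiers."""
    return {k: v for k, v in record.items() if k not in IDENTIFIER_FIELDS}


def group_counts(records, field, min_size=MIN_GROUP_SIZE):
    """Count people per group; report None where a group is too small."""
    counts = Counter(r[field] for r in records)
    return {group: (n if n >= min_size else None) for group, n in counts.items()}


deidentified = [strip_identifiers(r) for r in records]
by_ward = group_counts(deidentified, "ward")
print(by_ward)
```

The analysis runs on `deidentified`, and only the group-level table in `by_ward` would appear in a report.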

How does The Lab use administrative data analysis?

Now that you’ve read about administrative data analysis, check out how we’re using the technique to answer these questions for Washingtonians:

- How can looking back on a youth diversion program inform its future?

- What does crime look like in DC?

- How do the District’s employment services support people experiencing homelessness?

- How can we use data to make DC's criminal laws more fair?

- Are dockless bikes the right addition to the District's transportation options?

- What are DC residents’ main housing challenges?

- Does better data from gun crimes have an impact on criminal justice outcomes?

- Can person-centered support help residents achieve economic security?